Autonomous Vehicle Patent Applications, published 06-July-2017

Cruise Automation, GM’s expensive Silicon Valley outpost, is seeking to patent “A method for controlling an autonomous vehicle at an external interface, the method comprising: at an external interface, operably in communication with the autonomous vehicle: allowing an entity in a proximity of an outside of the autonomous vehicle to interact with the external interface; receiving, from the entity, one or more control inputs; and controlling, by the entity, one or more operations of the autonomous vehicle based on the one or more control inputs received using the external interface.” See US 20170192428. At first glance, it seems that every $20 remote-controlled toy car reads on these claims…

US 20170192423 to Cruise Automation seems to acknowledge the reality that autonomous vehicles may get stuck and need help. The application describes a method that includes generating an “assistance request” and envisions a human expert getting involved.

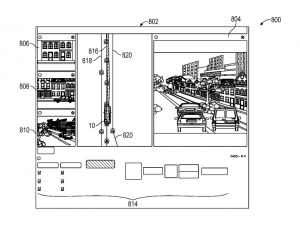

In the same field, GM’s patent publication US 20170192426 describes “A method for controlling an autonomous vehicle, the method comprising: determining that assistance is required for continued movement of the autonomous vehicle; and facilitating movement of the autonomous vehicle via implementation, by a processor onboard the autonomous vehicle, of manual instructions provided from a remote user that is remote from the autonomous vehicle.” The application includes an envisioned user interface showing live video from several cameras around the autonomous vehicle. The concept seems realistic: the OEMs will have lots of data about their autonomous vehicles, including live data (at least on request) from the cars.

Sony hasn’t really stood out in the autonomous car space, but apparently they are thinking about it. In US patent application publication 20170190331 they claim “A system for controlling a vehicle, said system comprising: […] an ECU […] to: detect a horn sound emanated from a second vehicle; capture first sensor data associated with said first vehicle based on said detected horn sound, wherein said captured first sensor data indicates a first traffic scenario in a vicinity of said first vehicle; extract second sensor data associated with said first vehicle for an elapsed time interval prior to said detection of said horn sound; and recalibrate one or more control systems in said first vehicle to perform one or more functions associated with said first vehicle based on said captured first sensor data and said extracted second sensor data.” Put simply, the autonomous car is supposed to react to a horn. I like the idea: honk at Sony’s car and it moves over to let me pass.
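The interesting technical wrinkle in Sony’s claim is that it needs sensor data from an interval *before* the horn was heard, which only works if the car keeps a rolling history. A minimal Python sketch of that buffering, assuming timestamped frames and a fixed look-back window, might look like this; `SensorFrame`, `HornEventRecorder` and `history_s` are hypothetical names, not taken from the application, and horn detection and recalibration are deliberately left out.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class SensorFrame:
    """Hypothetical sensor snapshot: timestamp in seconds plus a payload."""
    t: float
    data: dict


class HornEventRecorder:
    """Keeps a rolling window of sensor frames so that, when a horn is
    detected, the frames recorded shortly *before* it can be paired with
    the frame captured at detection time (an assumption-level sketch)."""

    def __init__(self, history_s: float = 5.0):
        self.history_s = history_s  # assumed look-back window in seconds
        self._buffer: deque[SensorFrame] = deque()

    def record(self, frame: SensorFrame) -> None:
        """Append a frame and drop frames older than the look-back window."""
        self._buffer.append(frame)
        while self._buffer and frame.t - self._buffer[0].t > self.history_s:
            self._buffer.popleft()

    def on_horn(self, current: SensorFrame) -> tuple[SensorFrame, list[SensorFrame]]:
        """Return (first data: the scene at horn time,
        second data: buffered frames from the preceding interval)."""
        prior = [f for f in self._buffer
                 if current.t - self.history_s <= f.t < current.t]
        return current, prior


if __name__ == "__main__":
    rec = HornEventRecorder(history_s=2.0)
    for t in [0.0, 1.0, 2.0, 3.0, 4.0]:
        rec.record(SensorFrame(t, {"speed": 10 + t}))
    now, before = rec.on_horn(SensorFrame(4.5, {"speed": 14.5}))
    print([f.t for f in before])  # [3.0, 4.0]
```

A deque keeps both appending new frames and evicting old ones cheap, which is why it is a common choice for this kind of "last N seconds" recorder.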

Ford is concerned with operating autonomous vehicles around emergency vehicles and suggests that an approaching emergency vehicle may cause the autonomous vehicle to enter a special “emergency autonomous mode” or switch out of autonomous mode into manual mode (US 20170192429). The suggested claim, as always, is broad: “[…] detecting an emergency vehicle near a host vehicle, wherein the host vehicle is an autonomous vehicle; receiving operational data from nearby vehicles, the operational data indicating whether one or more of the nearby vehicles is operating in an autonomous mode; and transmitting the operational data to the emergency vehicle.”

GM’s publication 20170190334 presents “A method for predicting turning intent of a host vehicle when approaching an intersection, said method comprising: obtaining a plurality of environmental cues that identify external parameters at or around the intersection, said environmental cues including position and velocity of remote vehicles; obtaining a plurality of host vehicle cues that define operation of the host vehicle; and predicting the turning intent of the host vehicle at the intersection before the host vehicle reaches the intersection based on both the environmental cues and the vehicle cues.”

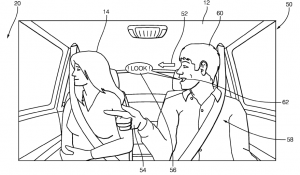

Delphi’s patent publication US 20170191838 might start routing autonomous cars through red-light districts, if that happens to be a route where the occupant-detection system senses occupant interest: “A navigation system suitable to use on an automated vehicle, said system comprising: an occupant-detection device used to determine a degree-of-interest of an occupant of a vehicle, said degree-of-interest determined with regard to a route traveled by the vehicle; and a memory used to store an interest-score of the route, said interest-score based on the degree-of-interest exhibited by the occupant while traveling the route.”

Google also seems determined to figure out where the user should go without asking (US 20170193627): “1. A method for facilitating transportation services between a user and a vehicle having an autonomous driving mode, the method comprising: receiving, by one or more server computing devices having one or more processors, information identifying a current location of the vehicle; determining, by the one or more server computing devices, that the user is likely to want to take a trip to a particular destination based on prior location history for the user; dispatching, by the one or more server computing devices, the vehicle to cause the vehicle to travel in the autonomous driving mode towards a location of the user; and after dispatching, by the one or more server computing devices, sending a notification to a client computing device associated with the user indicating that the vehicle is currently available to take the user to the particular destination.” So Google will dispatch the vehicle to take you to a place it has determined, not one you requested. We’ll drive you to the mall. Or you can stay here.
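The claim leaves open how “likely to want to take a trip to a particular destination” is derived from location history. One very simple way to picture it is a frequency count over past trips that started at a similar time. The Python sketch below assumes exactly that heuristic (same weekday, same hour, with a minimum number of trips and a minimum share for the top destination); `Trip`, `predict_destination`, `min_share` and `min_trips` are hypothetical and not taken from Google’s application, which may rely on something quite different.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Trip:
    """Hypothetical past trip from the user's location history."""
    start: datetime
    destination: str


def predict_destination(history: list[Trip], now: datetime,
                        min_share: float = 0.6,
                        min_trips: int = 3) -> str | None:
    """Guess the user's likely destination from past trips.

    Considers only trips that started on the same weekday and in the same
    hour as `now`. Returns the most common destination if it makes up at
    least `min_share` of those trips; returns None (nothing to dispatch)
    when there are fewer than `min_trips` comparable trips or no clear winner.
    """
    similar = [t.destination for t in history
               if t.start.weekday() == now.weekday()
               and t.start.hour == now.hour]
    if len(similar) < min_trips:
        return None
    destination, count = Counter(similar).most_common(1)[0]
    return destination if count / len(similar) >= min_share else None
```

With three past Saturday-evening trips to "mall" and one to "gym" in the same hour, the sketch would return "mall" for the next Saturday at that hour and None for a weekday with no comparable history; only a non-None result would trigger the dispatch and notification steps in the claim.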