Automatic Emergency Braking Failure Rates (Mobileye)

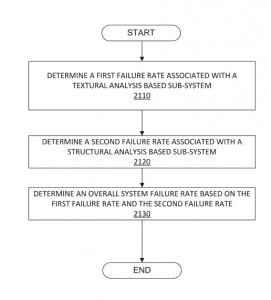

Mobileye’s patent application publication 20170203744 claims a method for assessing the overall system failure rate of a braking decision-making system for a vehicle. The system analyzes video data: it calculates a first failure rate based on changes in texture between images and a second failure rate based on optical flow. The specification explains that by taking two independent channels of information into account in the braking decision, e.g., a texture-based analysis and a structure-based (optical flow) analysis, a vehicle system may be able to assess the failure rate of each subsystem separately. These failure rates may then be multiplied, and the product may be used to assess the mean time between failures (MTBF).
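A minimal sketch of the multiplication the specification describes. It assumes each channel's failure rate is expressed as a probability of failing on a single braking decision (multiplying two per-hour rates directly would not produce a rate), that the two channels fail independently, and that failures occur at a constant rate. The function names and the numbers are hypothetical illustrations, not taken from the patent.

```python
# Sketch: combine two independent channel failure probabilities and derive an MTBF.
# All names and numbers are hypothetical illustrations, not from the patent.


def combined_failure_probability(p_texture: float, p_structure: float) -> float:
    """Probability that both channels fail on the same braking decision.

    Valid only if the two failures are statistically independent,
    which is the premise behind multiplying them.
    """
    return p_texture * p_structure


def mtbf_hours(p_fail_per_decision: float, decisions_per_hour: float) -> float:
    """Mean time between failures in hours, assuming a constant failure rate."""
    failures_per_hour = p_fail_per_decision * decisions_per_hour
    return 1.0 / failures_per_hour


if __name__ == "__main__":
    p_sys = combined_failure_probability(1e-4, 1e-4)  # hypothetical per-channel values
    print(f"P(system failure per decision) = {p_sys:.1e}")
    print(f"MTBF = {mtbf_hours(p_sys, decisions_per_hour=36_000):.0f} h")
```

With these illustrative inputs, two channels that each fail once in 10,000 decisions yield a combined probability of 1e-8 per decision; at 10 decisions per second (36,000 per hour) that corresponds to an MTBF of roughly 2,800 hours.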

Mobileye’s argument seems theoretically sound: if two independent subsystems are connected such that a system failure only materializes when both subsystems fail concurrently, the individual subsystem failure rates can be multiplied. A twin-engine airplane becomes a glider only if both engines fail. But Mobileye’s argument doesn’t fully convince me, since in practice one would have to prove that optical flow and texture analysis are truly independent, which they likely are not. Just as both engines of a twin-engine plane fail if the plane runs out of fuel, a particular visual effect may cause both optical flow and texture analysis to reach the wrong conclusion.
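To see why independence matters, the following sketch adds a hypothetical common-cause term, the software analogue of running out of fuel. It assumes that, with probability `p_common`, a single event makes both channels fail together, and that otherwise they fail independently. This is a simplified form of the common-cause modelling used in reliability engineering (for example, the beta-factor model), not anything Mobileye describes.

```python
# Sketch: two "redundant" channels that share a common failure cause.
# p_common is a hypothetical parameter, not something from the patent.


def system_failure_probability(p_a: float, p_b: float, p_common: float = 0.0) -> float:
    """Probability that both channels fail on the same decision.

    p_common: probability of a shared cause (e.g. a visual effect that fools
              both optical flow and texture analysis) that defeats both
              channels at once.
    p_a, p_b: failure probabilities of each channel when no common cause
              is present; in that case the channels fail independently.
    """
    independent_both_fail = (1.0 - p_common) * p_a * p_b
    return p_common + independent_both_fail


if __name__ == "__main__":
    p = 1e-4  # hypothetical per-channel failure probability
    for p_common in (0.0, 1e-6, 1e-5):
        result = system_failure_probability(p, p, p_common)
        print(f"p_common = {p_common:.0e} -> P(system failure) = {result:.2e}")
```

With 1e-4 per channel, independence promises 1e-8; a common-cause probability of only 1e-6 raises the result to about 1.01e-6, roughly a hundred times worse. Once `p_common` exceeds `p_a * p_b`, the shared cause dominates and the benefit of multiplying largely disappears.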

Also, Mobileye’s patent application targets emergency braking systems, where “not braking automatically” is considered a safe state. The analysis fails for automated vehicles, where “not braking when needed” is a serious failure mode. That is one of the key problems with autonomous vehicles: for years we have optimized driver assistance systems by relying on the human driver as the ultimate decision maker. Whenever sensor data became inconsistent or algorithms weren’t sure what to do, we let the human take care of the problem and had the automated system do nothing: if texture analysis and optical flow disagree, let the human sort it out. In autonomous vehicles, this logic no longer works. Preventing false brake activations and preventing missed brake activations are inherently conflicting goals: requiring both channels to agree before braking, for instance, suppresses false activations but raises the rate of missed ones. The failure rate of false brake activations inevitably influences the failure rate of missed brake activations. It would be really nice to see how Mobileye will solve that problem…
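The conflict between false and missed brake activations can be illustrated with two simple ways of combining the channels, assuming independent errors and hypothetical per-decision error probabilities. Braking only when both channels agree (the “let the human sort it out” policy, often called 2-out-of-2 voting) is compared with braking whenever either channel requests it (1-out-of-2 voting). The `Channel` type and the function names are made up for this illustration.

```python
# Sketch: how the voting rule trades false brake activations against missed ones.
# Channel, and_voting and or_voting are hypothetical names; errors assumed independent.
from dataclasses import dataclass


@dataclass(frozen=True)
class Channel:
    """Per-decision error probabilities for one channel (or a combined system)."""
    p_false_brake: float   # brakes although braking is not needed
    p_missed_brake: float  # does not brake although braking is needed


def and_voting(a: Channel, b: Channel) -> Channel:
    """Brake only if both channels request it; disagreement means no braking.

    A false brake needs both channels to false-trigger;
    a miss happens if at least one channel misses.
    """
    return Channel(
        p_false_brake=a.p_false_brake * b.p_false_brake,
        p_missed_brake=1.0 - (1.0 - a.p_missed_brake) * (1.0 - b.p_missed_brake),
    )


def or_voting(a: Channel, b: Channel) -> Channel:
    """Brake if either channel requests it; disagreement means braking.

    A false brake happens if at least one channel false-triggers;
    a miss needs both channels to miss.
    """
    return Channel(
        p_false_brake=1.0 - (1.0 - a.p_false_brake) * (1.0 - b.p_false_brake),
        p_missed_brake=a.p_missed_brake * b.p_missed_brake,
    )


if __name__ == "__main__":
    texture = Channel(p_false_brake=1e-3, p_missed_brake=1e-3)  # hypothetical values
    flow = Channel(p_false_brake=1e-3, p_missed_brake=1e-3)     # hypothetical values
    for name, combined in (("AND", and_voting(texture, flow)), ("OR", or_voting(texture, flow))):
        print(f"{name}: false brake {combined.p_false_brake:.2e}, "
              f"missed brake {combined.p_missed_brake:.2e}")
```

With both channels at 1e-3 for each error type, AND-voting cuts false activations to 1e-6 but roughly doubles missed activations to about 2e-3; OR-voting does the reverse. Neither rule improves both failure modes at once.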